Space-Time Guided Association Learning For Unsupervised Person Re-Identification

Abstract

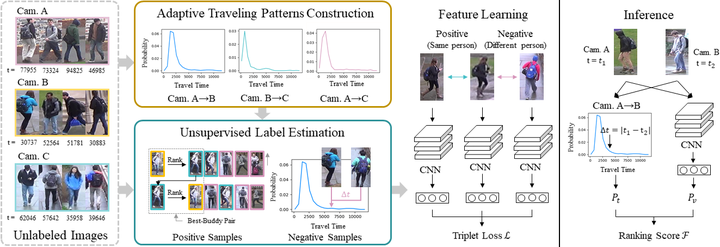

Person re-identification (Re-ID) aims to match images of the same person across distinct camera views. In this paper, we propose Space-Time Guided Association Learning (STGAL) for unsupervised Re-ID, in which neither ground-truth identities nor image correspondences are observed during training. By exploiting the spatial-temporal information present in pedestrian data, STGAL identifies positive and negative image pairs for learning Re-ID feature representations. Experiments on a variety of datasets confirm the effectiveness of our approach, which achieves promising performance compared with state-of-the-art methods.

Publication

IEEE International Conference on Image Processing

Date

October 2020